People sometimes wonder why Video Voice has no preprogrammed models of target sounds and words. There are actually very good reasons for that.

To start with, we don't know what targets any individual needs to work on. Consider the number of words that exist. In the English language alone, there are at least a quarter of a million words, according to the Oxford English Dictionary. And that's just English. Video Voice's displays are language-independent, and can easily be used for speech therapy in Spanish, French, Arabic or most any other language.

Even if we were to put together libraries of target models, there are other issues. Male and female voices usually differ distinctly, as do adult and child voices. It's difficult, if not impossible, to strip the pitch elements out of sounds, so a man may not be able to match a woman's model, nor a child an adult's.

Then there's the matter of dialectal differences. What constitutes the “correct” production of any sound? In the northeast part of the U.S., a word like “bet” is pronounced with a short vowel (“beht”). In the south, the vowel is often elongated to a diphthong, e.g., “bay-uht.” Which way is the right way? Well, that really depends on where you're living, doesn't it? To Video Voice, however, those two pronunciations won't “look” the same.

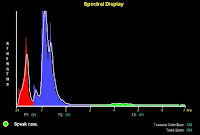

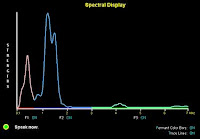

In the F2/F1 Formant Matrix representation, you can see the addition of the extra vowel sound in the red pattern ("Bay-uht"). The "ay" appears higher and more toward the left of the screen space. The durational differences in the sounds aren't strongly noticeable in this display, although the changes in the vowel sounds are.

If you switch to the F2/F1 Temporal representation for a cross-time view of the productions, the longer duration of "Bay-uht" is obvious. In both cases, you can see why the two words don't look the same to Video Voice, and why pre-programming models for use could end up being frustrating for users.

Model Libraries

Although there are no built-in models, Video Voice does provide a structure in which you can assemble your own sets of target models for your caseload, creating your very own model library. Once you’ve defined and stored them, they’re available for repeated use, or for transfer to other folders.

Within the Authorized User operating mode, each therapist can have up to 255 folders. Each folder can contain up to 255 models. That's a total of 65,025 separate models, more than you’re ever likely to need. (I sure hope you don't have 255 individuals in your caseload!) Video Voice will allow as many as 255 separate therapist folders, which means you could have as many as 16,581,375 models stored, but I think we can agree that's just plain silly.
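If you'd like to check that arithmetic yourself, here's a quick Python sketch. The constant names are made up for illustration; only the three limits of 255 come from Video Voice.

```python
# Limits quoted above; the constant names are illustrative only.
MAX_THERAPIST_FOLDERS = 255
MAX_CASELOAD_FOLDERS_PER_THERAPIST = 255
MAX_MODELS_PER_FOLDER = 255

# Models one therapist can store: caseload folders x models per folder.
models_per_therapist = MAX_CASELOAD_FOLDERS_PER_THERAPIST * MAX_MODELS_PER_FOLDER

# Models across every therapist folder on the system.
models_overall = MAX_THERAPIST_FOLDERS * models_per_therapist

print(f"{models_per_therapist:,}")  # 65,025
print(f"{models_overall:,}")        # 16,581,375
```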

So let's be serious. This caseload structure means you can easily build libraries of models, which may be useful if you’re a school-based therapist, especially if a number of your students have similar speech problems, such as articulation of /r/. By building a library of models featuring that sound in different positions, you’ll have a source for targets that you can use to set up directories individualized for each student. And then you’ve got a therapy tool customized to your particular needs.

How do you go about this? Start by setting up a Therapist folder to contain the library, giving it a name such as MODLIB. Then, within that folder, define a caseload folder with a name like R Sounds. Activate the R Sounds folder with New Session, then use Formant Create to make a series of models with R: run, race, round, etc. (Make sure you enunciate and stress the R during production so that it will be clearly visible in the patterns.)

You can repeat the process for S Sounds, Vowel Sounds, or whatever other targets you commonly work with, until your MODLIB folder holds a series of caseload folders containing your models.

Once you have built your library, you can transfer copies of any target models to any student's folder. Start by activating the desired student's folder with New Session. Then, go to the Data Management Copy Data function. Specify your MODLIB folder as the target source, and select and move desired models into the student's destination folder. Easy!
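For readers who like to think in terms of data, the library-and-copy idea above can be pictured with a small Python sketch. This is only a mental model and an assumption on our part: Video Voice is operated through its screens, not through code, and the nested dictionary, the `copy_models` function, and the SMITH and Student A names are all hypothetical. Only MODLIB, R Sounds, and the 255-model limit come from the text.

```python
MAX_MODELS_PER_FOLDER = 255  # limit quoted in the article

# Hypothetical layout: therapist folder -> caseload folder -> list of model names.
library = {
    "MODLIB": {
        "R Sounds": ["run", "race", "round"],
        "S Sounds": ["sun", "sock"],
    },
    "SMITH": {"Student A": []},  # made-up therapist and student folders
}

def copy_models(lib, source, destination, wanted):
    """Copy the wanted models from source to destination; the source is untouched.

    source and destination are (therapist_folder, caseload_folder) pairs.
    """
    src = lib[source[0]][source[1]]
    dst = lib[destination[0]][destination[1]]
    # Keep library order and skip models the student folder already has.
    picked = [m for m in src if m in wanted and m not in dst]
    if len(dst) + len(picked) > MAX_MODELS_PER_FOLDER:
        raise ValueError("destination folder would exceed 255 models")
    dst.extend(picked)
    return picked

copied = copy_models(
    library, ("MODLIB", "R Sounds"), ("SMITH", "Student A"), {"run", "round"}
)
print(copied)
```

The point of the sketch is simply that copying leaves the library folder as it was, so the same models can be handed out to as many student folders as you like.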

If you have students who share common therapy goals or articulation problems, you can also use this same strategy with their own data folders, treating them as a source for targets. Model libraries can streamline setup time, especially if you have a large caseload.

A final note. During therapy, consider library models to be only starting points. It’s always easiest to match your own voice, so when a student successfully produces a target, it’s a good idea to turn his voice pattern into the model. All it takes is a single click of the Replace (or SaveAs) button.

Model libraries are a good way to maximize your productivity with Video Voice and customize activities for everyone in your caseload. Why not give it a try?

Video Voice Support Team

1-800-537-2182

mv@videovoice.com

http://www.videovoice.com/